a shut mouth catches no flies ...

... but can make a business process go bust.

Just like in human communication, it is rather harmful in server communication when one partner stops communicating entirely and doesn't respond to any messages at all.

I was asked to troubleshoot a problem with a batch job. This job was running fine in the past years but all of a sudden it crashed every night.

Everyone involved in the business process, which is mapped by this job, claimed that nothing had changed. The devs hadn't changed the code, the DBAs said the Database ran fine, the sysadmins said there were no error reports besides sporadic application timeouts. The network guys said: everything runs within normal parameters.

What is even more interesting is the fact that the job crashed when it was started at its normal time before 01 am but ran through when it was repeated at 06 am.

Why was it repeated? Because the data it produces are urgently needed in the morning. If the job can't be started before 01 am further processes in the company are also delayed and a lot of extra work is caused.

So it is really an exciting problem, which no one was able to solve yet and that has to be solved fast!

To be able to follow the post more easily here is a brief introduction of the systems and software involved:

| batch server (BS) | application server(AS/82) | NSCALE archive server (NS/95) | |

| software | runs the batch | jboss to access the archive system (Ceyoniq server interface / TCP-connectionpool) | Ceyoniq NSCALE |

| kind | JAVA | JAVA | unknown |

| OS | AIX | Linux | Windows Server |

| IP Address (obfuscated) | XXX.XXX.XXX.49 | XXX.XXX.XXX.82 | XXX.XXX.XXX.95 |

javax.net.ssl.SSLException: A system call received a parameter that is not valid. (Read failed)

at com.ibm.jsse2.g.a(g.java:22)

at com.ibm.jsse2.ba.a(ba.java:38)

at com.ibm.jsse2.ba.a(ba.java:101)

at com.ibm.jsse2.ba.a(ba.java:62)

at com.ibm.jsse2.bi.a(bi.java:322)

at com.ibm.jsse2.bi.access$400(bi.java:368)

at com.ibm.jsse2.bi$b.read(bi$b.java:50)

at java.io.BufferedInputStream.fill(BufferedInputStream.java:257)

at java.io.BufferedInputStream.read1(BufferedInputStream.java:297)

at java.io.BufferedInputStream.read(BufferedInputStream.java:356)

at sun.net.www.http.HttpClient.parseHTTPHeader(HttpClient.java:766)

at sun.net.www.http.HttpClient.parseHTTP(HttpClient.java:709)

at sun.net.www.http.HttpClient.parseHTTP(HttpClient.java:737)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1605)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1510)

at java.net.HttpURLConnection.getResponseCode(HttpURLConnection.java:491)

at com.ibm.net.ssl.www2.protocol.https.b.getResponseCode(b.java:56)

...

Suppressed: java.net.SocketException: A system call received a parameter that is not valid. (Write failed)

at java.net.SocketOutputStream.socketWrite(SocketOutputStream.java:122)

at java.net.SocketOutputStream.write(SocketOutputStream.java:166)

at com.ibm.jsse2.f.a(f.java:130)

at com.ibm.jsse2.ba.a(ba.java:222)

... 22 more

Caused by: java.net.SocketException: A system call received a parameter that is not valid. (Read failed)

at java.net.SocketInputStream.socketRead(SocketInputStream.java:127)

at java.net.SocketInputStream.read(SocketInputStream.java:182)

at java.net.SocketInputStream.read(SocketInputStream.java:152)

at com.ibm.jsse2.aj.a(aj.java:184)

at com.ibm.jsse2.aj.g(aj.java:102)

at com.ibm.jsse2.aj.d(aj.java:39)

at com.ibm.jsse2.bi.a(bi.java:77)

at com.ibm.jsse2.bi.access$300(bi.java:111)

at com.ibm.jsse2.bi$b.read(bi$b.java:78)

... 17 more

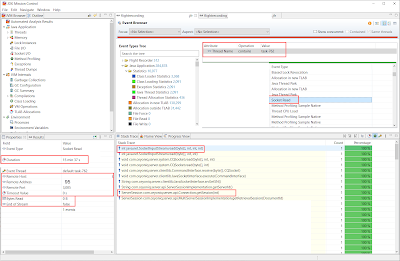

The JFR revealed aborted socket reads (bytes read: 0 / end of stream: false ) that took more than 15 minutes. These reads try to fetch documents from the NS/95. The screenshot shows the information for one socket read done by the thread task-762.

Furthermore a javax.transaction.RollbackException is thrown by the same thread (task-762) indicating that the transaction was marked for rollback. The transaction timeout is set to 10 minutes in the jboss.

In short: the batch job aborts because the transactions which it triggers in the AS/82 are running longer than the set transaction timeout. And we know that it's not the fault of the AS/82 that the transaction is running this long.

NS/95 must be responsible for the socket read taking 15 Min and 37 seconds because AS/82 is passively waiting for data to arrive. The next logical step is to trace what is happening at the NS/95 in the meantime.

In order to troubleshoot an unknown software on a windows host we use Windows Performce Recorder (WPR) in combination with Windows Performance Analyser (WPA). During the subsequent batch run (and crash), a WPR trace was recorded on the NS/95.

And here too, a direct hit.

WPR has recorded TCP/IP ConnectAborts on connections where the remote is the AS/82. The process server.exe is the NSCALE archive software running on that windows machine (NS/95).

There were no other abnormalities: no spikes in CPU or long running methods. It appears that there may be a network issue, as the AS/82 experiences socket read stalls at night, and the NS/95 detected connectivity problems with the AS/82.

What is happening at the "wires" connecting the two servers? The most effective way to investigate this is by utilizing tcpdump and Wireshark.

Initially, my colleagues were reluctant to utilize tcpdump, as they believed it to be a "hacker tool" that captures all data, including sensitive client information. However, I argued that this data was not relevant to our troubleshooting efforts. Tcpdump can be configured to solely capture the TCP/IP header of every packet by limiting the capture to only the first n bytes.

The following excerpt shows a recurring pattern of the AS/82 tcpdumps :

1 000.000 XXX.XXX.XXX.82 44568 XXX.XXX.XXX.95 3005 1509 24 44568 → 3005 [PSH, ACK] Seq=1 Ack=1 Win=1509 Len=24 2 000.200 XXX.XXX.XXX.82 44568 XXX.XXX.XXX.95 3005 1509 24 [TCP Retransmission] 44568 → 3005 [PSH, ACK] Seq=1 Ack=1 Win=1509 Len=24 ... 16 924.000 XXX.XXX.XXX.82 44568 XXX.XXX.XXX.95 3005 1509 24 [TCP Retransmission] 44568 → 3005 [PSH, ACK] Seq=1 Ack=1 Win=1509 Len=24

The AS/82 sends data to the NS/95 on port 3005. NSCALE listens on this port (first line).

According to the TCP/IP standard, the subsequent step should involve the NS/95 responding with either an ACK or data, along with a "piggyback ACK" to acknowledge the packet on line one.

Instead, the AS/82 attempts to retransmit the data. Could this indicate a malfunction in the NS/95?

To verify, we examined the tcpdump on the NS/95 during the same timeframe.

Surprisingly: the packets from AS/82 are all arriving including the retransmits. But the NS/95 keeps its "mouth" shut and doesn't reply at all.

Mind blown!

At least we would have anticipated the NS/95 to transmit a RST in order to signify an invalid connection. Here we also reencountered the 15 minutes from the JFR. The default in Linux is to try to retransmit packets up to 924 seconds (15 times with ever increasing gaps between the retries). If the other side doesn't reply during this timeframe, Linux abandons this connection and tells the calling program: broken pipe.

What do we currently know?

The batch job sends a request to the AS/82. AS/82 starts a transaction and requests data from NS/95. NS/95 receives the request but doesn't reply. This leads to a rollback for the transaction and the batch job goes bust. In the morning everything runs smoothly. The questions that arise are: What causes NS/95 not to reply? Why does it only work in certain time frames?

Thinking about it, we had the idea that it might be a firewall related problem. We read documentations about the Windows Server firewall and learned that it is a so called stealth firewall. This type of firewall deliberately violates the TCP/IP standard by remaining unresponsive when it receives a packet on a port without an assigned socket, or in other words, when there is no active connection on the specified port. This is done to prevent port scanners from gathering information about servers.

Once more, this would suggest that there is no active connection between the AS/82 and NS/95 when the issue arises. However, how can there be no connection if the AS/82 is capable of successfully sending data (arriving retransmits) on a socket to the NS/95, as proven by the tcpdumps.

To examine the presence of connections and their respective states, we performed a 24-hour netstat check, running netstat every minute on both the AS/82 and NS/95.

left netstat AS/82 / right netstat NS/95 ... 20.01.2023 22:00 TCP XXX.XXX.XXX.82:46000 XXX.XXX.XXX.95:3005 ESTABLISHED TCP XXX.XXX.XXX.95:3005 XXX.XXX.XXX.82:46000 ESTABLISHED TCP XXX.XXX.XXX.82:55000 XXX.XXX.XXX.95:3005 ESTABLISHED TCP XXX.XXX.XXX.95:3005 XXX.XXX.XXX.82:55000 ESTABLISHED ... 20.01.2023 23:36 TCP XXX.XXX.XXX.82:46000 XXX.XXX.XXX.95:3005 ESTABLISHED TCP XXX.XXX.XXX.82:55000 XXX.XXX.XXX.95:3005 ESTABLISHED ... 21.01.2023 00:42 TCP XXX.XXX.XXX.82:46000 XXX.XXX.XXX.95:3005 ESTABLISHED ... 20.01.2023 01:49

At 10:00 pm the sockets that form the connections are in state established on both servers.

At 11:36 pm the sockets are gone on the NS/95 side but the AS/82 still has its sockets in state established. So something in windows shuts down its sockets at a certain point in time without telling the other side. Again a violation of the TCP/IP standard (no FIN or RST sent - confirmed by tcpdumps).

We searched for ways to debug this on windows but couldn't come up with something that was sophisticated. We hoped for something like in JFR: When a certain event happens (connection abort?), windows could show the stack that leads to this event so we could identify the code that was responsible for the problem. Also the Microsoft support wasn't able to help. We thought about misbehaving firewall rules, but couldn't find any.

!!! If anyone has experience solving problems of this nature on Windows, please get in touch with me. I'm eager to expand my knowledge on the subject !!!

Despite the setback, we wanted to find a solution or at least a workaround.

On the AS/82 side some of the sockets are gone between 00 am and 01 am and some are gone later. The ones gone first are those closed after the failed retransmits done by the AS/82 described early.

But obviously there must be a second mechanism in place that makes Linux aware of broken connections. Perhaps if we understand that mechanism we could exploit it for a workaround for our problem. If the sockets are gone that have no counterpart in the NS/95 before the batch starts, new working connections might be created (in the AS/82 TCP-connectionpool), when the batch job needs a connection.

In the meantime, it turned out that something had changed before the problem occurred for the first time. The NS/95 had been replaced by a new server with Windows Server 2022 (previously 2016).

So adding that to things we already know we have found our troublemaker: Windows Server 2022

Back to the problem. Let's find out what is going on the AS/82 network layer between 11:00 pm and 02 am the next day to identify the mentioned second mechanism. Here is the tcpdump that was taken to investigate. I have already annotated what is going on:

The first line shows a keepalive that the AS/82 received from the NS/95 and it immediately replied with an ACK for that package (second line). Then there is radio silence for two hours (7200 seconds). We know why: Windows has killed the socket on its side without sending an RST or FIN to the AS/82. After the two hours the AS/82 sends 9 keepalives to the NS/95 in an interval of 75 seconds.

Because NS/95 doesn't react to the keepalives the AS/82 sends a RST to the NS/95 (last line) indicating that it will terminate the connection and the socket will also be closed on its side. The application server then gets a broken pipe message.

This behavior is controlled by the three settings in Linux highlighted in the screenshot.

These settings can be used to workaround the problem. So we set them to the following settings:

tcp_keepalive_time 540 tcp_keepalive_probes 6 tcp_keepalive_intvl 10

The next time the batch ran just fine before 01 am. The "dead" sockets are now sorted out at around 11:46 pm. And when the batch starts the AS/82 has no broken connections in its connectionpool and everything works just fine.

Summary

Windows Server handles TCP-connections differently in version 2022 than in version 2016, resulting in changes that affect connections to AS/82. At precisely 11:36 pm every day, Windows Server 2022 closes connections to AS/82, causing AS/82 to continue using the connections without realizing they are closed. If AS/82 tries to send data through one of these connections, it will not receive any response from NS/95 due to its stealth firewall. Consequently, AS/82 goes into a 15-minute retransmit loop. Within these 15 minutes, the application server's transaction timeout expires, aborting any ongoing batch that started before 01 am. However, if the batch starts at 06 am, Linux default keepalive settings of (11:36 pm + 132 minutes (7200+9*75/60) ) have ensured that there are no more broken connections , allowing the batch to run smoothly.

With the new settings, Linux identifies dead connections after only 10 minutes (540+6*10/60)…

An alternative solution would be to set java.net.Socket#setSoTimeout() in the code of the application server to a value smaller than the jboss transaction timeout. If a connection were dead, the control would return to the application after this time expired, and it could attempt to establish a new connection.

Today, you cannot rely on business partners such as Microsoft, so it is crucial to make yourself and your code resilient to the behavior of third parties. The only people you can depend on are your colleagues, and I appreciate the excellent cooperation on this fascinating case.

Comments

Post a Comment